Mastering Multimodal AI Literacy: The Ultimate 2026 Student Guide

In 2026, the definition of “AI Literacy” has shifted. It is no longer enough to know how to write a text prompt. Today’s academic and professional landscape demands Multimodal AI Literacy: the ability to work fluently with AI across text, voice, vision, and video. For students on StudyWithAI, mastering these “cross-sensory” workflows is the key to cutting study time in half and future-proofing your career.

I. What is Multimodal AI Literacy?

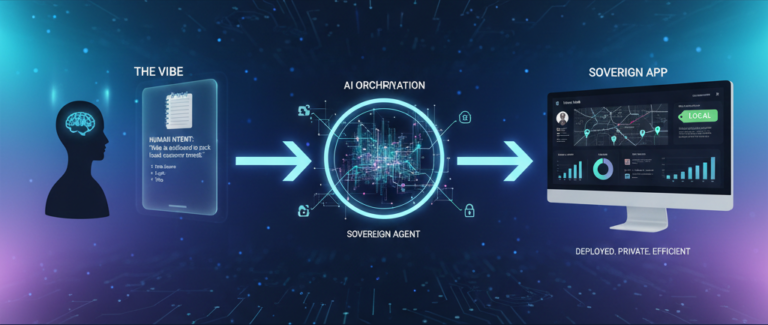

Multimodal AI literacy is the competence to use AI systems that process several types of input at once. Unlike early unimodal (text-only) bots, multimodal models like Google Gemini 3 Flash and GPT-5 can “see” a diagram you draw, “hear” your explanation of it, and “write” a corrected version in real time.

Key Stat: Research in 2026 shows that students using multimodal AI workflows improve their complex problem-solving accuracy by 23.6% compared to those using text-only tools.

II. Top 2026 Multimodal Tools for Students

To build an elite study stack, you need tools that excel in specific sensory reasoning. Here are the 2026 leaders:

| Tool | Primary Modality | Best Academic Use Case |
| --- | --- | --- |
| Google Gemini 3 | Video & Vision | Scanning 50-page textbooks or live lectures for instant mind-mapping. |
| Qwen2.5-VL | Complex Diagrams | Analyzing intricate engineering blueprints and mathematical charts. |
| NotebookLM | Audio Synthesis | Turning “Grounded” PDF sources into a 2-person study podcast. |
| GLM-4.5V | 3D Spatial Reasoning | Visualizing organic chemistry or architectural models in AR. |

III. How to Build a Multimodal Study Workflow

To rank at the top of your class, follow this “Input-Fusion” method:

Step 1: The Vision Capture

Use your smartphone or AR glasses to capture visual data. Whether it’s a messy whiteboard or a complex biology diagram, let the AI “see” the raw data.

- Pro Tip: Use Socratic by Google to take a photo of a math problem for a step-by-step visual breakdown.

Step 2: The Voice Layer

Don’t just read the summary. Engage in a Socratic Debate using voice mode. Ask the AI: “I see the diagram says X, but my notes say Y. Why is there a contradiction?” This forces the AI to use multimodal reasoning to bridge the gap between your notes and the textbook.

Step 3: Grounded Synthesis

Finally, use the AI to generate a Multimodal Narrative. Instead of a plain essay, have the AI help you create a presentation that includes generated infographics and voice-over scripts based strictly on your uploaded “Second Brain” sources.
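For readers who script their own study tools, the three steps above can be sketched in a few lines of Python. This is only an illustration, not how any particular app works: the `StudyInput` class, the `build_grounded_prompt` function, the file names, and the sample contents are all hypothetical, and the sketch stops at assembling a prompt rather than calling a real AI service.

```python
from dataclasses import dataclass


@dataclass
class StudyInput:
    """One captured piece of study material (hypothetical structure)."""
    modality: str  # "image", "audio", or "text"
    source: str    # file name or label showing where it came from
    content: str   # caption, transcript, or the text itself


def build_grounded_prompt(inputs: list[StudyInput], question: str) -> str:
    """Combine captured inputs into one prompt that limits the AI to those sources."""
    if not inputs:
        raise ValueError("at least one input is required for a grounded prompt")
    lines = ["Answer using ONLY the numbered sources below. Cite sources by number."]
    for number, item in enumerate(inputs, start=1):
        lines.append(f"[{number}] ({item.modality}, {item.source}) {item.content}")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


# Step 1 (vision capture) and your own notes, with made-up sample contents.
inputs = [
    StudyInput("image", "whiteboard.jpg", "Diagram places glycolysis in the mitochondrion."),
    StudyInput("text", "lecture-notes.txt", "Glycolysis takes place in the cytoplasm."),
]

# Step 2 (the contradiction question) fused with Step 3 (grounded sources only).
prompt = build_grounded_prompt(
    inputs, "The diagram and my notes disagree. Which is correct, and why?"
)
print(prompt)
```

Numbering each source and asking for citations by number makes it easier to check afterwards that an answer really came from your own material.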

IV. Career Readiness: Why Employers Demand Multimodal Skills

In 2026, “AI fluency” is a basic career requirement. Employers are no longer looking for people who can “use AI”; they want AI Collaborators.

- Operational Adaptability: Can you use an AI agent to analyze a live video feed of a warehouse or a surgical procedure and provide insights?

- Ethical Evaluation: Can you detect bias in how an AI interprets visual data versus text data?

- Cross-Functional Communication: Can you translate AI-generated data visualizations into a spoken business strategy?

V. Academic Integrity in 2026

As we embrace multimodal tools, remember: AI is a co-pilot, not the captain.

1. Transparency: Disclose when you use AI Vision to analyze data.

2. Verification: Always check AI-generated citations.

3. Originality: Use AI to organize your thoughts, but ensure the final synthesis is your own.
